Software Testing/Meeting Notes 2019-04-08

| Line 68: | Line 68: | ||

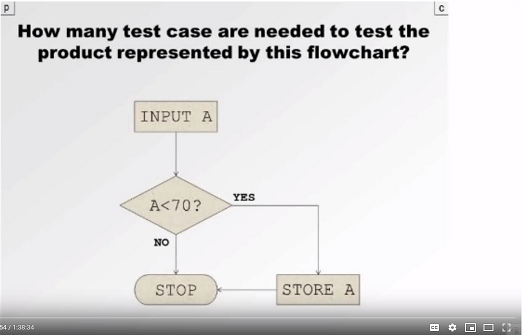

* Even the simplest test "is A < 70 ?" can have seven or eight tests | * Even the simplest test "is A < 70 ?" can have seven or eight tests | ||

| + | [[File:FlowchartExampleResized.png]] | ||

** Test results, but also overflows, boundaries, different data types | ** Test results, but also overflows, boundaries, different data types | ||

** Input validation can require many tests | ** Input validation can require many tests | ||

Latest revision as of 21:25, 2 May 2019

Software Testing

- Date

- Monday, 8 April 2019 from 7:00pm to 9:00pm

- Meetup Event

- https://www.meetup.com/NetSquared-Kitchener-Waterloo/events/260073069/

- Location

- Room 1300 -- Conrad Grebel University College, 140 Westmount Rd. N., Waterloo, Ontario

If you work in software development, how much of your job involves testing? If you're a project manager, do you work closely with the testers to keep an eye on their results and bug reports as a project progresses? If you are a developer, do you do your own unit testing and work with testers on test plan reviews and fix the bugs they find when they do integration and regression testing? If you are a software tester, how do you balance the need to be thorough with the need to deliver on time? How does that affect writing test plans? When a project team has to deal with a lot of changes in the middle of development and testing, how do you cope with updating test plans with limited time? Since you should be considerate when reporting bugs in software, especially when people have worked hard on it, how can you do this tactfully? How do testers work with people like technical writers who use test plans as a reference when writing documentation like user manuals?

Nicholas Collins, a long-time member of KWNPSA and a professional software tester, will give us an overview of the deep art of testing software.

--Bob Jonkman & Marc Paré

Resources

- TechSoup Canada catalogue: Developer Software

- OBS Project - software that is good for capturing videos of tests, including drop-down menus

- One of James Bach's talks on YouTube

- Software Testing Additional Notes - Nicholas Collins' presentation notes

Meeting Notes

- Introductions

- Nicholas Collins

- Software tester for a few years, knowledge of how his company works

- But two years isn't a long time compared to some software testers

- Nick has prepared notes, will be presenting slightly differently from other KWNPSA sessions

- SysAdmin in insurance industry; laid off (as are many of us); back to school to upgrade IT skills

- Uses Visual Studio, C#, other languages

- People he's met were developers, or had business-specific skills; when software testers are needed these people are thrust into the role

- This might change as more universities offer software testing as a major

- There are very few courses or certificates in software testing, more prevalent in the US

- But Fanshawe College in London has a certificate program

- Some institutions have a couple of courses in tech writing, project management, quality management; maybe a night course in software quality testing

- Without academic rigour, different people use different terminology, nomenclature

- "Should I know what all these different terms mean?" But it's fairly common with other software testers Nick has spoken to

- At Microsoft, developers use their development skills to write tests. Needs more skills than just coding

- Microsoft has internal courses to train testers how to test software

- Get promoted to full developer once you've proven you can write tests

- People use terminology like "Post-mortem" (although nobody dies), mix up "Milestone" and "Benchmark", &c.

- Software testing is the start for a developer's career, then to DevOps

- Does this mean the most junior, inexperienced programmers are responsible for testing software?

- Nick: large companies use junior testers to run tests, senior testers to supervise

- During an upgrade Nick (a programmer at the time) did testing for the Database Analyst

- But a junior intern was assigned to that role as well, just to gain experience.

- Worked out details at a high level, then applied tests to get results

- Project Managers take different approaches

- You can always think of more tests

- It's a fine balance between staying on schedule and being thorough

- Walkthroughs and working in a team can be helpful

- Some testing instructors do not like teaching from texts

- eg. "Software Testing" by Yogesh Singh

- But Nicholas gets good ideas from texts, doesn't agree with those testing instructors

- The problem is that the authors suffer from "Perfect Worldism"

- A world where there is unlimited time and money, and the perfect tests can be developed

- Nicholas has experience with sticky problems, gets ideas from texts to adapt to his problem

- Party talk: Software tester does not lead to stimulating conversation

- YouTube presenter on software testing is not dull! https://www.youtube.com/watch?v=ILkT_HV9DVU

- Even the simplest test "is A < 70 ?" can have seven or eight tests

- Test results, but also overflows, boundaries, different data types

- Input validation can require many tests
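
The "is A < 70 ?" point can be sketched as a small table-driven test. This is only an illustration, not something shown at the meeting: the function `is_below_threshold` and the chosen cases are assumptions, picked to cover the result on each side of the boundary, a very large value, and wrong data types.

```python
def is_below_threshold(a, threshold=70):
    """Hypothetical function under test: True when a is a number below threshold."""
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(a, bool) or not isinstance(a, (int, float)):
        raise TypeError(f"expected a number, got {type(a).__name__}")
    if a != a:  # NaN is the only value that is not equal to itself
        raise ValueError("NaN cannot be compared")
    return a < threshold


# Each case: (input, expected result or expected exception type)
THRESHOLD_CASES = [
    (69, True),         # just below the boundary
    (70, False),        # on the boundary
    (71, False),        # just above the boundary
    (-1, True),         # negative value
    (69.999, True),     # different numeric type (float)
    (10**30, False),    # far larger than a 32- or 64-bit integer
    ("69", TypeError),  # wrong data type: a string that looks like a number
    (None, TypeError),  # missing value
]


def run_cases():
    """Run every case and return a list of (input, expected, actual) failures."""
    failures = []
    for value, expected in THRESHOLD_CASES:
        try:
            actual = is_below_threshold(value)
        except Exception as exc:
            actual = type(exc)
        if actual != expected:
            failures.append((value, expected, actual))
    return failures
```

Eight cases for one comparison is in line with the "seven or eight tests" estimate, and the list still leaves out things like locale-specific number formats.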

- Working with other people, eg. technical writers

- For them to understand the software they'll play with the software, and may create unanticipated conditions

- Everyone can be a software tester to some degree: Project manager, developer, writer. Even sales?

- Sometimes testers find problems with usability as they're running tests; not part of the test suite

- How effective are some of these ad-hoc testers?

- Is there a bias? Do they have some incentive to pass tests even when there are problems?

- Sometimes a QA will hold back tests that would have been better to give to the developer in the first place

- Accessibility testing is a new skill for QA, may become a testing requirement

- Business Analyst (BA), developer and tester make a good team

- Sometimes the process of testing will identify the need for more testing

- Reporting bugs

- Requires consideration, tact

- Test plans may need to be developed quickly

- But near the end of a project when time is tight there may not be time to develop tests

- So quality of code may suffer near the end of the project

- Breaking things during testing that no-one has time to fix

- Automated testing?

- Nick has experience with automated regression testing

- Automated regression testing reduces the introduction of new bugs

- Open Broadcaster Software

- Used to catch all activity during user testing

- Also uses the VirtualBox recorder, which runs on the host to capture all the output on the VM screen

- "Monkey Testing"

- Also "fuzz testing" or "fuzzing"

- Fill all fields, try to overflow, pound on the keyboard, click as fast as possible

- But this does not lead to reproducible errors (fine timing errors)

- Although some testers claim they can reproduce
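
One common way to make random-input testing repeatable is to drive it from a recorded seed. The sketch below is not from the presentation: `parse_age` is a hypothetical function under test and `fuzz` is an assumed helper. It only makes the generated inputs reproducible; timing-dependent errors from fast clicking would still need other techniques.

```python
import random
import string


def parse_age(text):
    """Hypothetical function under test: parse an age field from a form."""
    value = int(text.strip())
    if not 0 <= value <= 150:
        raise ValueError("age out of range")
    return value


def fuzz(target, seed, iterations=1000, max_len=20):
    """Feed random strings to target; return inputs that caused unexpected errors."""
    rng = random.Random(seed)  # private generator, so a run depends only on the seed
    crashes = []
    for _ in range(iterations):
        length = rng.randint(0, max_len)
        text = "".join(rng.choice(string.printable) for _ in range(length))
        try:
            target(text)
        except ValueError:
            pass  # rejecting bad input cleanly is the expected behaviour
        except Exception as exc:  # anything else is a finding worth reporting
            crashes.append((text, type(exc).__name__))
    return crashes
```

Logging the seed with each bug report means the same sequence of inputs can be replayed later with `fuzz(parse_age, seed)`.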

- Pride in finding bugs?

- Nick finds that the "high five" time should occur only after the entire team has identified, reported, documented, and fixed the error, and re-tested

- Load testing

- Hitting a system with a large number of transactions, &c.

- But a bogged down system may not be writing to logs, making analysis difficult

- A benefit of load testing is that adding assertions can find issues with threads

- Assertions and Singletons...

- Be sure to validate the output even when just testing for capacity

- Nick has written a test for XML testing

- But the code Nick wrote was not well tested at all! Oh, the irony!
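
The advice to validate output while load testing can be illustrated with a small threaded sketch. Everything here is assumed for illustration: `Counter` stands in for the system under load, and the worker counts are arbitrary. The point is that the assertions check the results, not only that the run finished.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Counter:
    """Hypothetical shared resource standing in for the system under load."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:  # without the lock, concurrent updates could be lost
            self._value += 1
            return self._value


def load_test(workers=8, requests_per_worker=1000):
    """Hit the counter from several threads and validate every response."""
    counter = Counter()

    def worker(_):
        return [counter.increment() for _ in range(requests_per_worker)]

    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(worker, range(workers)))

    seen = [value for batch in results for value in batch]
    total = workers * requests_per_worker
    # Validate the output, not just the throughput.
    assert len(seen) == total, "some requests were dropped"
    assert sorted(seen) == list(range(1, total + 1)), "duplicate or missing values"
    return total
```

If the lock were removed, the second assertion is the one that would be expected to catch duplicate values under contention.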

- Q: Do you use debugger software like GDB to examine the flow of code?

- A: Not common, but becoming more prevalent.

- Certainly having a debugger to throw at the code is nice to have

- But much testing is done with the software under test as a black box, just examine the input and the expected output

- Nick speaks of the complexity of software testing.

- One thing works fine by itself, and another thing does too, but do they work together?

- Different software on different platforms needs to interoperate, but sometimes differences in date formats cause problems

- although each platform by itself passed all tests

- Dealing with currencies, eg. USD and CAD, and GBP

- Dealing with leap years and 29 February

- General rule: Anything date sensitive needs to test for leap years

- and time zones! Anything dealing with calendars needs to worry about time zones
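
The leap-year and time-zone rules above lend themselves to a few concrete checks. This is a sketch using only the Python standard library; `add_one_year` is a hypothetical helper, and the fixed UTC-4 offset is an assumption that ignores daylight saving rules.

```python
import calendar
from datetime import date, datetime, timedelta, timezone


def add_one_year(d):
    """Hypothetical helper: same calendar day one year later; 29 Feb maps to 28 Feb."""
    try:
        return d.replace(year=d.year + 1)
    except ValueError:  # 29 February does not exist in the following year
        return d.replace(year=d.year + 1, day=28)


# Century years are leap years only when divisible by 400.
LEAP_YEAR_CASES = {1900: False, 2000: True, 2019: False, 2020: True, 2100: False}


def check_dates():
    """Run the leap-year and time-zone checks; raises AssertionError on failure."""
    for year, expected in LEAP_YEAR_CASES.items():
        assert calendar.isleap(year) == expected, year
    assert add_one_year(date(2020, 2, 29)) == date(2021, 2, 28)
    assert add_one_year(date(2019, 4, 8)) == date(2020, 4, 8)

    # The same instant falls on different calendar days in different zones.
    utc_instant = datetime(2019, 4, 9, 1, 0, tzinfo=timezone.utc)
    eastern = timezone(timedelta(hours=-4))  # assumed fixed offset, no DST handling
    assert utc_instant.astimezone(eastern).date() == date(2019, 4, 8)
    assert utc_instant.date() == date(2019, 4, 9)
    return True
```

The last two assertions show why "what day is it?" depends on where the question is asked, which is the kind of issue that appears only when two systems exchange dates.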

- What happens internationally when different countries need to interoperate?

- Companies have service contracts that define how the service is implemented

- If the system is changed, the contract defines who is responsible for continued interoperation

- If I make a change and it breaks your system, it's your fault for not defining the contract accurately

- called "spring contracts"

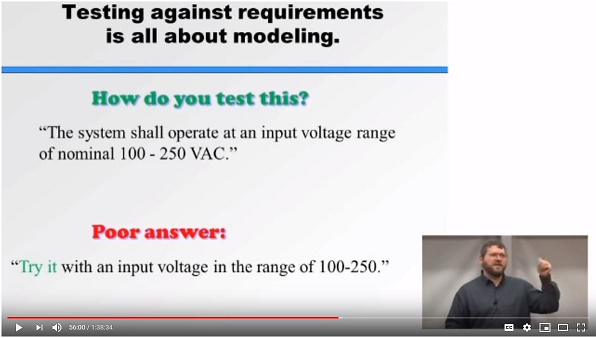

- Nick gives an example from James ---- YouTube video ("nominal input voltage is 100VAC to 250VAC")

- "Test the nominal range" is an incomplete answer

- Also need to test outside the range

- The user manual may give advice not to go outside the nominal range, but users don't necessarily read the manual

- So, does the system fail gracefully outside the nominal range?

- This is the function of the software tester, to design the test to ensure that software or equipment is failsafe

- eg. for medical equipment

- How much money is available to fry the device under test? Some prototypes may be really expensive

- Many examples of people damaging electronics with incorrect application of voltage!

- It's good for testers to think outside the parameters of the system
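
The nominal-range example (100VAC to 250VAC) can be turned into test cases that deliberately go outside the range. The controller function and its `"run"` / `"safe_shutdown"` results are hypothetical, invented here to show what "fails gracefully" might look like in a test table.

```python
NOMINAL_MIN_VAC = 100
NOMINAL_MAX_VAC = 250


def accept_input_voltage(volts):
    """Hypothetical controller check: 'run' inside the nominal range, else 'safe_shutdown'."""
    if isinstance(volts, bool) or not isinstance(volts, (int, float)):
        return "safe_shutdown"  # a non-numeric reading is treated as a fault
    if NOMINAL_MIN_VAC <= volts <= NOMINAL_MAX_VAC:
        return "run"
    return "safe_shutdown"  # also reached for NaN, since NaN comparisons are False


VOLTAGE_CASES = [
    (100, "run"),                     # lower edge of the nominal range
    (175, "run"),                     # middle of the nominal range
    (250, "run"),                     # upper edge of the nominal range
    (99.9, "safe_shutdown"),          # just below the range
    (250.1, "safe_shutdown"),         # just above the range
    (0, "safe_shutdown"),             # no power
    (-120, "safe_shutdown"),          # nonsensical negative reading
    (1000, "safe_shutdown"),          # far above the range
    (float("nan"), "safe_shutdown"),  # sensor returned not-a-number
    (None, "safe_shutdown"),          # sensor returned nothing
]


def failing_voltage_cases():
    """Return the cases where the controller did not behave as expected."""
    return [
        (volts, expected, accept_input_voltage(volts))
        for volts, expected in VOLTAGE_CASES
        if accept_input_voltage(volts) != expected
    ]
```

Only three of the ten cases are inside the nominal range; the rest are the inputs a user who never read the manual might supply.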

- Testing to ensure system has a consistent look and feel

- eg. fonts on some menus were different

- Is that a software tester's responsibility? Sometimes as an additional task

- There are tools (overlays, templates) to find these issues

- Window resizing can make the application fail, but there need to be limits for those tests

- Testing for "greyed out" functions can be time consuming

- A function that is available when it shouldn't be can result in errors

- These are general things for a tester to keep in mind

- Systems that have features which have little to do with each other

- Easy to test they're not contending for resources, &c.

- But still important to run these features simultaneously to shake loose bugs, eg. memory allocation, concurrent DB access

- Perhaps a simple monitor with limited functions: But what if something goes wrong, does the device report an error?

- Client-side data validation: All testing needs to be duplicated at the server to ensure malusers don't bypass client-side validation

- But that increases load on the server
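
A server-side check that repeats the client-side rules might look like the sketch below. The field names, the username pattern, and the age limits are all assumptions for illustration; the idea is only that the server never trusts that the browser already validated the form.

```python
import re

# Assumed rule: 3 to 20 letters, digits or underscores.
USERNAME_RE = re.compile(r"[A-Za-z0-9_]{3,20}")


def validate_signup(form):
    """Hypothetical server-side check; returns field -> error message (empty if valid)."""
    errors = {}

    username = form.get("username")
    if not isinstance(username, str) or not USERNAME_RE.fullmatch(username):
        errors["username"] = "3-20 letters, digits or underscores"

    try:
        age_value = int(form.get("age"))
    except (TypeError, ValueError):
        errors["age"] = "must be a whole number"
    else:
        if not 0 <= age_value <= 150:
            errors["age"] = "out of range"

    return errors
```

A tester would submit requests directly to the server, bypassing the form, for example `validate_signup({"username": "x'; DROP TABLE users;--", "age": "-5"})`, and expect both fields to be rejected.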

- Logging

- Logs may indicate problems with the way the code executes, eg. repeated log entries indicate an invalid loop

- Circular reasoning: How can the logs from software under test be considered

- Logs are only one step, begin the process of analysis
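
As a first step in log analysis, repeated entries can be found mechanically. This sketch assumes the log has already been reduced to a list of message strings with timestamps stripped; `find_repeated_runs` and the threshold of five repeats are illustrative choices.

```python
from itertools import groupby


def find_repeated_runs(lines, min_repeats=5):
    """Return (message, count) for each run of identical consecutive messages."""
    runs = []
    for message, group in groupby(lines):
        count = sum(1 for _ in group)
        if count >= min_repeats:
            runs.append((message, count))
    return runs
```

A run such as six consecutive "retry connect" messages would be flagged for a human to look at; whether it really is an invalid loop still needs analysis of the code.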

- NewRelic will test user experience (surveillance software)

- Nick has found bugs because the test suites are well designed

- But at least half the time the bugs discovered were found in spite of the test, which was not designed to find that kind of bug

- Q&A

- Is the developer + tester model usable?

- May be a bit scary for shops not set up for that collaborative arrangement

- Nick says to just forge ahead.

- Having experience is good, but can also develop that experience in-house

- Worries about the coming requirements for accessibility for software

- May take changes in coding practices (use POSH: Plain Ol' Semantic HTML instead of Javascripted forms)

- Jurisdictional differences may be difficult to deal with
